AI Function Calling

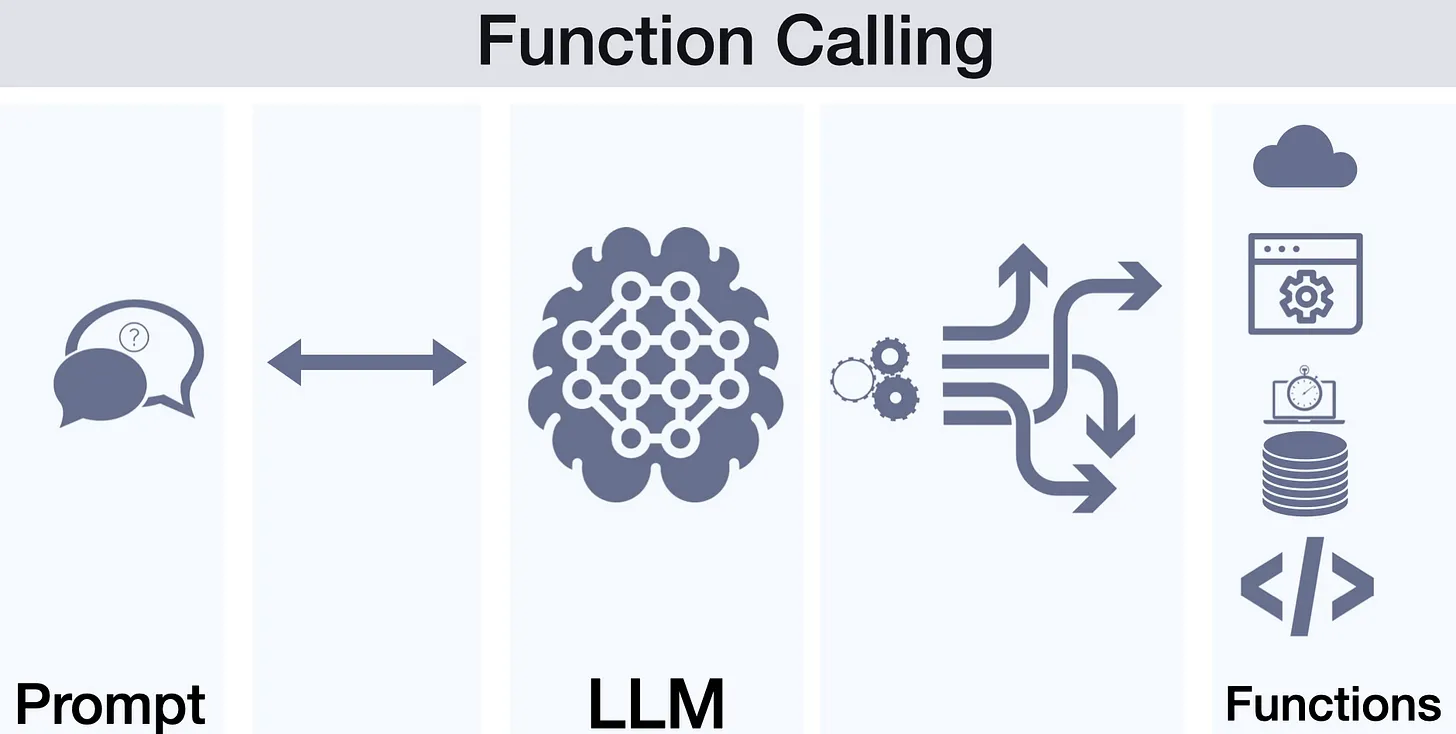

@autobe uses AI function calling to generate AST (Abstract Syntax Tree) data rather than having the AI write raw program code as text. The system validates the AST data generated through function calling, returns feedback to the AI for correction when errors are detected, and finally converts the validated AST data into actual program code.
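The generate, validate, correct, and convert cycle can be sketched as follows. This is a minimal illustration under stated assumptions, not @autobe's actual implementation: `checkAst`, `fakeModel`, `renderAst`, and `correctUntilValid` are hypothetical names, the AST is reduced to a toy shape, and the AI call is replaced by a stub that returns a broken AST on its first attempt.

```typescript
// Hypothetical, heavily reduced AST: one model with a name and field names.
interface SketchModelAst {
  name: string;
  fields: string[];
}

type SketchResult =
  | { success: true; data: SketchModelAst }
  | { success: false; errors: string[] };

// Stand-in for a compiler-generated validator: collects every problem found.
function checkAst(input: unknown): SketchResult {
  if (typeof input !== "object" || input === null)
    return { success: false, errors: ["$input: expected an object"] };
  const ast = input as Partial<SketchModelAst>;
  const errors: string[] = [];
  // Assumed snake_case rule, used here only for illustration.
  if (typeof ast.name !== "string" || !/^[a-z][a-z0-9_]*$/.test(ast.name))
    errors.push("$input.name: expected a snake_case string");
  if (!Array.isArray(ast.fields) || ast.fields.length === 0)
    errors.push("$input.fields: expected a non-empty array");
  return errors.length === 0
    ? { success: true, data: ast as SketchModelAst }
    : { success: false, errors };
}

// Stub for the AI: the first attempt is invalid, the retry follows the feedback.
function fakeModel(feedback: string[]): unknown {
  return feedback.length === 0
    ? { name: "BbsArticles", fields: [] }
    : { name: "bbs_articles", fields: ["id", "title"] };
}

// Final step: convert the validated AST into text (a toy output format).
function renderAst(ast: SketchModelAst): string {
  return `table ${ast.name} (${ast.fields.join(", ")})`;
}

function correctUntilValid(maxAttempts: number): string {
  let feedback: string[] = [];
  for (let attempt = 1; attempt <= maxAttempts; ++attempt) {
    const result = checkAst(fakeModel(feedback));
    if (result.success) return renderAst(result.data);
    feedback = result.errors; // sent back to the AI on the next attempt
  }
  throw new Error(`AST still invalid after ${maxAttempts} attempts`);
}

console.log(correctUntilValid(3));
```

Because code is only rendered from an AST that has passed validation, a malformed attempt never reaches the output; it only produces feedback for the next attempt.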

Therefore, the success of @autobe depends on three critical factors. First, how precisely and efficiently the AI function calling schemas can be created. Second, how detailed and accurate the validation feedback is when the AI generates incorrect AST data. Third, how clearly the coding rules that @autobe must follow while constructing code (composing AST data) are communicated to the AI.
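To illustrate the second factor, the sketch below collects every violation as a structured record and renders it as feedback text. It rests on assumptions: the `path` / `expected` / `value` shape is modeled on the kind of error records typia's validators report, but `SketchError`, `SketchFile`, `checkFile`, and `toFeedback` are hypothetical names, the checks are written by hand rather than generated by the compiler, and the snake_case rule is a guess.

```typescript
interface SketchError {
  path: string; // where the violation occurred
  expected: string; // what the type required
  value: unknown; // what the AI actually produced
}

interface SketchFile {
  filename: string;
  models: { name: string }[];
}

// Hand-written stand-in for a generated validator of a schema file AST.
function checkFile(file: SketchFile): SketchError[] {
  const errors: SketchError[] = [];
  // Same pattern as the `filename` property of AutoBeDatabase.IFile.
  if (!/^[a-zA-Z0-9._-]+\.prisma$/.test(file.filename))
    errors.push({
      path: "$input.filename",
      expected: 'string & Pattern<"^[a-zA-Z0-9._-]+\\.prisma$">',
      value: file.filename,
    });
  if (file.models.length < 1)
    errors.push({
      path: "$input.models",
      expected: "Array<IModel> & MinItems<1>",
      value: file.models,
    });
  file.models.forEach((model, i) => {
    // Assumed snake_case rule; the real SnakeCasePattern is not shown here.
    if (!/^[a-z][a-z0-9_]*$/.test(model.name))
      errors.push({
        path: `$input.models[${i}].name`,
        expected: "string & SnakeCasePattern",
        value: model.name,
      });
  });
  return errors;
}

// Render all errors at once so the AI can fix them in a single retry.
function toFeedback(errors: SketchError[]): string {
  return errors
    .map((e) => `- ${e.path}: expected ${e.expected}, but got ${JSON.stringify(e.value)}`)
    .join("\n");
}

const fileErrors = checkFile({
  filename: "schema-03-actors.txt",
  models: [{ name: "ShoppingCustomers" }],
});
console.log(toFeedback(fileErrors));
```

Reporting the exact path and the expected type for every violation, instead of stopping at the first one, gives the AI enough context to correct the whole AST in one pass.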

To address these challenges, @autobe uses typia to generate AI function calling schemas at the compiler level. The typia-integrated compiler not only creates the AI function calling schemas but also generates a validation function for each type. Moreover, the coding rules that @autobe must follow are embedded as comments in each AST type, and typia records these comments as type descriptions when it converts the TypeScript types into AI function calling schemas.
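The sketch below shows, by hand, roughly what this means for a single property: the comment becomes a `description` string that travels with the structural constraints. The schema object is written manually for illustration, so the exact output of typia may differ (for example, how constraints are expressed can depend on the target model); `filenameSchema`, `matchesFilename`, and `describeRules` are hypothetical names.

```typescript
// TypeScript source (what the developer writes):
//
//   /** Name of the schema file to be generated. */
//   filename: string & tags.Pattern<"^[a-zA-Z0-9._-]+\\.prisma$">;

// Approximate schema fragment (what the AI receives), written by hand.
const filenameSchema = {
  type: "string",
  pattern: "^[a-zA-Z0-9._-]+\\.prisma$",
  description: "Name of the schema file to be generated.",
} as const;

// The structural part of the schema drives validation...
function matchesFilename(value: string): boolean {
  return new RegExp(filenameSchema.pattern).test(value);
}

// ...while the description carries the coding rule to the AI.
function describeRules(schemas: Record<string, { description: string }>): string {
  return Object.entries(schemas)
    .map(([key, schema]) => `${key}: ${schema.description}`)
    .join("\n");
}

console.log(matchesFilename("schema-02-systematic.prisma")); // true
console.log(matchesFilename("schema.sql")); // false
console.log(describeRules({ filename: filenameSchema }));
```

Keeping the rule in the type's comment means the schema, the validator, and the instructions shown to the AI all come from one source and cannot drift apart.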

This approach ensures both type safety and rule compliance through compiler-driven automation.

Schema Made by Compiler

Schema Generation

import { AutoBeDatabase, AutoBeOpenApi, AutoBeTest } from "@autobe/interface";

import { ILlmApplication } from "@samchon/openapi";
import typia from "typia";

// Build the function calling schemas for every method of ICodeGenerator.
const app: ILlmApplication<"chatgpt"> = typia.llm.application<
  ICodeGenerator,
  "chatgpt",
  { reference: true }
>();
console.log(app);

interface ICodeGenerator {
  /**
   * Generate Prisma AST application for database schema design.
   */
  generatePrismaSchema(app: AutoBeDatabase.IApplication): void;

  /**
   * Generate OpenAPI document for RESTful API design.
   */
  generateOpenApiDocument(app: AutoBeOpenApi.IDocument): void;

  /**
   * Generate a test function as AST data.
   */
  generateTestFunction(func: AutoBeTest.IFunction): void;
}

import { tags } from "typia";

import { CamelCasePattern } from "../typings";

import { SnakeCasePattern } from "../typings/SnakeCasePattern";

/**

* AST type system for programmatic Prisma ORM schema generation through AI

* function calling.

*

* This namespace defines a comprehensive Abstract Syntax Tree structure that

* enables AI agents to construct complete Prisma schema files at the AST level.

* Each type corresponds to specific Prisma Schema Language (PSL) constructs,

* allowing precise control over generated database schemas while maintaining

* type safety and business logic accuracy.

*

* ## Core Purpose

*

* The system is designed for systematic generation where AI function calls

* build database schemas step-by-step, mapping business requirements to

* executable Prisma schema code. Instead of generating raw PSL strings, AI

* agents construct structured AST objects that represent:

*

* - Complete database schemas organized by business domains

* - Properly typed models with relationships and constraints

* - Performance-optimized indexes for common query patterns

* - Business-appropriate data types and validation rules

*

* ## Architecture Overview

*

* - **IApplication**: Root container representing the entire database schema

* - **IFile**: Domain-specific schema files organized by business functionality

* - **IModel**: Database tables representing business entities with full

* relationship mapping

* - **Fields**: Primary keys, foreign keys, and business data fields with proper

* typing

* - **Indexes**: Performance optimization through unique, regular, and full-text

* search indexes

*

* ## Domain-Driven Schema Organization

*

* Schemas are typically organized into multiple domain-specific files following

* DDD principles:

*

* - Core/System: Foundation entities and application configuration

* - Identity: User management, authentication, and authorization

* - Business Logic: Domain-specific entities and workflows

* - Transactions: Financial operations and payment processing

* - Communication: Messaging, notifications, and user interactions

* - Content: Media management, documentation, and publishing systems

* - Analytics: Reporting, metrics, and business intelligence data

*

* ## Database Design Patterns

*

* The generated schemas follow enterprise-grade patterns:

*

* - UUID primary keys for distributed system compatibility and security

* - Snapshot/versioning tables for audit trails and data history

* - Junction tables for many-to-many relationship management

* - Materialized views for performance optimization of complex queries

* - Soft deletion patterns with timestamp-based lifecycle management

* - Full-text search capabilities using PostgreSQL GIN indexes

*

* Each generated schema reflects real business workflows where entities

* maintain proper relationships, data integrity constraints, and performance

* characteristics suitable for production applications handling complex

* business logic and high query volumes.

*

* @author Samchon

*/

export namespace AutoBeDatabase {

/**

* Root interface representing the entire database application schema.

*

* Contains multiple schema files that will be generated, typically organized

* by business domain. Based on the uploaded schemas, applications usually

* contain 8-10 files covering different functional areas like user

* management, sales, orders, etc.

*/

export interface IApplication {

/**

* Array of Prisma schema files to be generated.

*

* Each file represents a specific business domain or functional area.

* Examples from uploaded schemas: systematic (channels/sections), actors

* (users/customers), sales (products/snapshots), carts, orders, coupons,

* coins (deposits/mileage), inquiries, favorites, and articles (BBS

* system).

*/

files: IFile[];

}

/**

* Interface representing a single Prisma schema file within the application.

*

* Each file focuses on a specific business domain and contains related

* models. File organization follows domain-driven design principles as seen

* in the uploaded schemas.

*/

export interface IFile {

/**

* Name of the schema file to be generated.

*

* Should follow the naming convention: "schema-{number}-{domain}.prisma".

* Examples: "schema-02-systematic.prisma", "schema-03-actors.prisma". The

* number indicates the dependency order for schema generation.

*/

filename: string & tags.Pattern<"^[a-zA-Z0-9._-]+\\.prisma$">;

/**

* Business domain namespace that groups related models.

*

* Used in Prisma documentation comments as "@\namespace directive".

* Examples from uploaded schemas: "Systematic", "Actors", "Sales", "Carts",

* "Orders", "Coupons", "Coins", "Inquiries", "Favorites", "Articles"

*/

namespace: string;

/**

* Array of Prisma models (database tables) within this domain.

*

* Each model represents a business entity or concept within the namespace.

* Models can reference each other through foreign key relationships.

*/

models: IModel[] & tags.MinItems<1>;

}

/**

* Interface representing a single Prisma model (database table).

*

* Based on the uploaded schemas, models follow specific patterns:

*

* - Main business entities (e.g., shopping_sales, shopping_customers)

* - Snapshot/versioning entities for audit trails (e.g.,

* shopping_sale_snapshots)

* - Junction tables for M:N relationships (e.g.,

* shopping_cart_commodity_stocks)

* - Materialized views for performance (prefixed with mv_)

*/

export interface IModel {

/**

* Name of the Prisma model (database table name).

*

* MUST use snake_case naming convention. Examples: "shopping_customers",

* "shopping_sale_snapshots", "bbs_articles". Materialized views use the

* "mv_" prefix: "mv_shopping_sale_last_snapshots".

*/

name: string & SnakeCasePattern;

/**

* Detailed description explaining the business purpose and usage of the

* model.

*

* Should include:

*

* - Business context and purpose

* - Key relationships with other models

* - Important behavioral notes or constraints

* - References to related entities using "{@\link ModelName}" syntax

*

* **IMPORTANT**: Description must be written in English. Example: "Customer

* information, but not a person but a **connection** basis..."

*/

description: string;

/**

* Indicates whether this model represents a materialized view for

* performance optimization.

*

* Materialized views are read-only computed tables that cache complex query

* results. They're marked as "@\hidden" in documentation and prefixed with

* "mv_" in naming. Examples: mv_shopping_sale_last_snapshots,

* mv_shopping_cart_commodity_prices

*/

material: boolean;

/**

* Specifies the architectural stance of this model within the database

* system.

*

* This property defines how the table positions itself in relation to other

* tables and what role it plays in the overall data architecture,

* particularly for API endpoint generation and business logic

* organization.

*

* ## Values:

*

* ### `"primary"` - Main Business Entity

*

* Tables that represent core business concepts and serve as the primary

* subjects of user operations. These tables typically warrant independent

* CRUD API endpoints since users directly interact with these entities.

*

* **Key principle**: If users need to independently create, search, filter,

* or manage entities regardless of their parent context, the table should

* be primary stance.

*

* **API Requirements:**

*

* - Independent creation endpoints (POST /articles, POST /comments)

* - Search and filtering capabilities across all instances

* - Direct update and delete operations

* - List/pagination endpoints for browsing

*

* **Why `bbs_article_comments` is primary, not subsidiary:**

*

* Although comments belong to articles, they require independent

* management:

*

* - **Search across articles**: "Find all comments by user X across all

* articles"

* - **Moderation workflows**: "List all pending comments for review"

* - **User activity**: "Show all comments made by this user"

* - **Independent operations**: Users edit/delete their comments directly

* - **Notification systems**: "Alert when any comment is posted"

*

* If comments were subsidiary, these operations would be impossible or

* require inefficient nested queries through parent articles.

*

* **Characteristics:**

*

* - Represents tangible business concepts that users manage

* - Serves as reference points for other tables

* - Requires comprehensive API operations (CREATE, READ, UPDATE, DELETE)

* - Forms the backbone of the application's business logic

*

* **Examples:**

*

* - `bbs_articles` - Forum posts that users create, edit, and manage

* - `bbs_article_comments` - User comments that require independent

* management

*

* ### `"actor"` - Authenticated Actor Entity

*

* Tables that represent a true actor in the system (a distinct user type

* with its own authentication flow, table schema, and business logic).

* Actor tables are the canonical identity records used across the system,

* and their sessions are recorded in a separate session table.

*

* **Key principle**: If the user type requires a distinct table and

* authentication flow (not just an attribute), the table is an actor.

*

* **Characteristics:**

*

* - Serves as the primary identity record for that actor type

* - Owns credentials and actor-specific profile data

* - Has one-to-many sessions stored in a dedicated session table

* - Used as the root of permission and ownership relationships

*

* **Examples:**

*

* - `users` - Standard application users with authentication

* - `shopping_customers` - Customers with login and purchase history

* - `shopping_sellers` - Sellers with business credentials

* - `administrators` - Admins with elevated permissions

*

* ### `"session"` - Actor Session Entity

*

* Tables that represent login sessions for a specific actor. A session

* table always belongs to exactly one actor type and contains connection

* context and temporal fields for auditing.

*

* **Key principle**: A session table exists only to track actor logins and

* must reference exactly one actor table.

*

* **Characteristics:**

*

* - Child of a single actor table (many sessions per actor)

* - Stores connection metadata (IP, headers, referrer)

* - Append-only audit trail of login events

* - Managed through authentication flows, not direct user CRUD

*

* **Examples:**

*

* - `user_sessions` - Sessions for `users`

* - `shopping_customer_sessions` - Sessions for `shopping_customers`

*

* ### `"subsidiary"` - Supporting/Dependent Entity

*

* Tables that exist to support primary entities but are not independently

* managed by users. These tables are typically managed through their parent

* entities and may not need standalone API endpoints.

*

* **Characteristics:**

*

* - Depends on primary or snapshot entities for context

* - Often managed indirectly through parent entity operations

* - May have limited or no independent API operations

* - Provides supporting data or relationships

*

* **Examples:**

*

* - `bbs_article_snapshot_files` - Files attached to article snapshots

* - `bbs_article_snapshot_tags` - Tags associated with article snapshots

* - `bbs_article_comment_snapshot_files` - Files attached to comment

* snapshots

*

* ### `"snapshot"` - Historical/Versioning Entity

*

* Tables that capture point-in-time states of primary entities for audit

* trails, version control, or historical tracking. These tables record

* changes but are rarely modified directly by users.

*

* **Characteristics:**

*

* - Captures historical states of primary entities

* - Typically append-only (rarely updated or deleted)

* - Referenced for audit trails and change tracking

* - Usually read-only from user perspective

*

* **Examples:**

*

* - `bbs_article_snapshots` - Historical states of articles

* - `bbs_article_comment_snapshots` - Comment modification history

*

* ## API Generation Guidelines:

*

* The stance property guides automatic API endpoint generation:

*

* - **`"actor"`** → Generate identity and authentication endpoints for the

* actor type

* - **`"session"`** → Generate session lifecycle endpoints bound to actor

* authentication flows

* - **`"primary"`** → Generate full CRUD endpoints based on business

* requirements

* - **`"subsidiary"`** → Evaluate carefully; often managed through parent

* entities

* - **`"snapshot"`** → Typically read-only endpoints for historical data

* access

*

* @example

* ```typescript

* // Actor entity - identity and authentication root

* {

* name: "users",

* stance: "actor",

* description: "Authenticated users of the application"

* }

*

* // Session entity - audit log of logins for an actor

* {

* name: "user_sessions",

* stance: "session",

* description: "Login sessions for users"

* }

*

* // Primary business entity - needs full CRUD API

* {

* name: "bbs_articles",

* stance: "primary",

* description: "Forum articles that users create and manage independently"

* }

*

* // Another primary entity - despite being "child" of articles

* {

* name: "bbs_article_comments",

* stance: "primary",

* description: "User comments requiring independent search and management"

* }

*

* // Subsidiary entity - managed through snapshot operations

* {

* name: "bbs_article_snapshot_files",

* stance: "subsidiary",

* description: "Files attached to article snapshots, managed via snapshot APIs"

* }

*

* // Snapshot entity - read-only historical data

* {

* name: "bbs_article_snapshots",

* stance: "snapshot",

* description: "Historical states of articles for audit and versioning"

* }

* ```

*/

stance: "primary" | "subsidiary" | "snapshot" | "actor" | "session";

//----

// FIELDS

//----

/**

* The primary key field of the model.

*

* In all uploaded schemas, primary keys are always UUID type with "@\id"

* directive. Usually named "id" and marked with "@\db.Uuid" for PostgreSQL

* mapping.

*/

primaryField: IPrimaryField;

/**

* Array of foreign key fields that reference other models.

*

* These establish relationships between models and include Prisma relation

* directives. Can be nullable (optional relationships) or required

* (mandatory relationships). May have unique constraints for 1:1

* relationships.

*/

foreignFields: IForeignField[];

/**

* Array of regular data fields that don't reference other models.

*

* Include business data like names, descriptions, timestamps, flags,

* amounts, etc. Common patterns: created_at, updated_at, deleted_at for

* soft deletion and auditing.

*/

plainFields: IPlainField[];

//----

// INDEXES

//----

/**

* Array of unique indexes for enforcing data integrity constraints.

*

* Ensure uniqueness across single or multiple columns. Examples: unique

* email addresses, unique codes within a channel, unique combinations like

* (channel_id, nickname).

*/

uniqueIndexes: IUniqueIndex[];

/**

* Array of regular indexes for query performance optimization.

*

* Speed up common query patterns like filtering by foreign keys, date

* ranges, or frequently searched fields. Examples: indexes on created_at,

* foreign key fields, search fields.

*/

plainIndexes: IPlainIndex[];

/**

* Array of GIN (Generalized Inverted Index) indexes for full-text search.

*

* Used specifically for PostgreSQL text search capabilities using trigram

* operations. Applied to text fields that need fuzzy matching or partial

* text search. Examples: searching names, nicknames, titles, content

* bodies.

*/

ginIndexes: IGinIndex[];

}

/**

* Interface representing the primary key field of a Prisma model.

*

* All models in the uploaded schemas use UUID as primary key for better

* distributed system compatibility and security (no sequential ID exposure).

*/

export interface IPrimaryField {

/**

* Name of the primary key field.

*

* MUST use snake_case naming convention. Consistently named "id" across all

* models in the uploaded schemas. Represents the unique identifier for each

* record in the table.

*/

name: string & SnakeCasePattern;

/**

* Data type of the primary key field.

*

* Always "uuid" in the uploaded schemas for better distributed system

* support and to avoid exposing sequential IDs that could reveal business

* information.

*/

type: "uuid";

/**

* Description of the primary key field's purpose.

*

* Standard description is "Primary Key." across all models. Serves as the

* unique identifier for the model instance.

*

* **IMPORTANT**: Description must be written in English.

*/

description: string;

/**

* @ignore

* @internal

*/

nullable?: boolean;

}

/**

* Interface representing a foreign key field that establishes relationships

* between models.

*

* Foreign keys create associations between models, enabling relational data

* modeling. They can represent 1:1, 1:N, or participate in M:N relationships

* through junction tables.

*/

export interface IForeignField {

/**

* Name of the foreign key field.

*

* MUST use snake_case naming convention. Follows the convention

* "{target_model_name_without_prefix}_id". Examples: "shopping_customer_id",

* "bbs_article_id", "attachment_file_id". For self-references: "parent_id"

* (e.g., in hierarchical structures).

*/

name: string & SnakeCasePattern;

/**

* Data type of the foreign key field.

*

* Always "uuid" to match the primary key type of referenced models. Ensures

* referential integrity and consistency across the schema.

*/

type: "uuid";

/**

* Description explaining the purpose and target of this foreign key

* relationship.

*

* Should reference the target model using the format "Target model's {@\link

* ModelName.id}". Example: "Belonged customer's {@\link

* shopping_customers.id}". May include additional context about the

* relationship's business meaning.

*

* **IMPORTANT**: Description must be written in English.

*/

description: string;

/**

* Prisma relation configuration defining the association details.

*

* Specifies how this foreign key connects to the target model, including

* relation name, target model, and target field. This configuration is used

* to generate the appropriate Prisma relation directive in the schema.

*/

relation: IRelation;

/**

* Whether this foreign key has a unique constraint.

*

* - `true`: Creates a 1:1 relationship (e.g., user profile, order publish

* details)

* - `false`: Allows a 1:N relationship (e.g., customer to multiple orders)

*

* Used for enforcing business rules about relationship cardinality.

*/

unique: boolean;

/**

* Whether this foreign key can be null (optional relationship).

*

* - `true`: Relationship is optional, foreign key can be null

* - `false`: Relationship is required, foreign key cannot be null

*

* Reflects business rules about mandatory vs optional associations.

*/

nullable: boolean;

}

/**

* Interface representing a Prisma relation configuration between models.

*

* This interface defines how foreign key fields establish relationships with

* their target models. It provides the necessary information for Prisma to

* generate appropriate relation directives (@relation) in the schema,

* enabling proper relational data modeling and ORM functionality.

*

* The relation configuration is essential for:

*

* - Generating correct Prisma relation syntax

* - Establishing bidirectional relationships between models

* - Enabling proper type-safe querying through Prisma client

* - Supporting complex relationship patterns (1:1, 1:N, M:N)

*/

export interface IRelation {

/**

* Name of the relation property in the Prisma model.

*

* This becomes the property name used to access the related model instance

* through the Prisma client. Should be descriptive and reflect the business

* relationship being modeled.

*

* Examples:

*

* - "customer" for shopping_customer_id field

* - "channel" for shopping_channel_id field

* - "parent" for parent_id field in hierarchical structures

* - "snapshot" for versioning relationships

* - "article" for bbs_article_id field

*

* Naming convention: camelCase, descriptive of the relationship's business

* meaning

*/

name: string & CamelCasePattern;

/**

* Name of the target model being referenced by this relation.

*

* Must exactly match an existing model name in the schema. This is used by

* Prisma to establish the foreign key constraint and generate the

* appropriate relation mapping.

*

* Examples:

*

* - "shopping_customers" for customer relationships

* - "shopping_channels" for channel relationships

* - "bbs_articles" for article relationships

* - "attachment_files" for file attachments

*

* The target model should exist in the same schema or be accessible through

* the Prisma schema configuration.

*/

targetModel: string;

/**

* Name of the inverse relation property that will be generated in the

* target model.

*

* In Prisma's bidirectional relationships, both sides need relation

* properties. While {@link name} defines the property in the current model

* (the one with the foreign key), `oppositeName` defines the property that

* will be generated in the target model for back-reference.

*

* For 1:N relationships, the target model's property will be an array type.

* For 1:1 relationships (when the foreign key has unique constraint), it

* will be a singular reference.

*

* Examples (when this FK is defined in `bbs_article_comments` model):

*

* - Name: "article" → `comment.article` accesses the parent article

* - OppositeName: "comments" → `article.comments` accesses child comments

*

* More examples:

*

* - "sessions" for `users` model to access `user_sessions[]`

* - "snapshots" for `bbs_articles` model to access `bbs_article_snapshots[]`

* - "children" for self-referential hierarchies via `parent` relation

*

* Naming convention: camelCase, typically plural for 1:N relationships,

* singular for 1:1 relationships.

*/

oppositeName: string & CamelCasePattern;

/**

* Optional explicit mapping name for complex relationship scenarios.

*

* Used internally by Prisma to handle advanced relationship patterns such

* as:

*

* - Self-referential relationships with multiple foreign keys

* - Complex many-to-many relationships through junction tables

* - Relationships that require custom naming to avoid conflicts

*

* When not specified, Prisma automatically generates appropriate mapping

* names based on the model names and field names. This field should only be

* used when the automatic naming conflicts with other relationships or when

* specific naming is required for business logic.

*

* Examples:

*

* - "ParentChild" for hierarchical self-references

* - "CustomerOrder" for customer-order relationships

* - "UserProfile" for user-profile 1:1 relationships

*

* @internal This field is primarily used by the code generation system

* and should not be modified unless dealing with complex relationship patterns.

*/

mappingName?: string;

}

/**

* Interface representing a regular data field that stores business

* information.

*

* These fields contain the actual business data like names, amounts,

* timestamps, flags, descriptions, and other domain-specific information.

*/

export interface IPlainField {

/**

* Name of the field in the database table.

*

* MUST use snake_case naming convention. Common patterns from uploaded

* schemas:

*

* - Timestamps: created_at, updated_at, deleted_at, opened_at, closed_at

* - Identifiers: code, name, nickname, title

* - Business data: value, quantity, price, volume, balance

* - Flags: primary, required, exclusive, secret, multiplicative

*/

name: string & SnakeCasePattern;

/**

* Data type of the field for Prisma schema generation.

*

* Maps to appropriate Prisma/PostgreSQL types:

*

* - Boolean: Boolean flags and yes/no values

* - Int: Integer numbers, quantities, sequences

* - Double: Decimal numbers, prices, monetary values, percentages

* - String: Text data, names, descriptions, codes

* - Uri: URL/URI fields for links and references

* - Uuid: UUID fields (for non-foreign-key UUIDs)

* - Datetime: Timestamp fields with date and time

*/

type: "boolean" | "int" | "double" | "string" | "uri" | "uuid" | "datetime";

/**

* Description explaining the business purpose and usage of this field.

*

* Should clearly explain:

*

* - What business concept this field represents

* - Valid values or constraints if applicable

* - How it relates to business processes

* - Any special behavioral notes

*

* **IMPORTANT**: Description must be written in English. Example: "Amount

* of cash payment." or "Whether the unit is required or not."

*/

description: string;

/**

* Whether this field can contain null values.

*

* - `true`: Field is optional and can be null (e.g., middle name, description)

* - `false`: Field is required and cannot be null (e.g., creation timestamp,

* name)

*

* Reflects business rules about mandatory vs optional data.

*/

nullable: boolean;

}

/**

* Interface representing a unique index constraint on one or more fields.

*

* Unique indexes enforce data integrity by ensuring no duplicate values exist

* for the specified field combination. Essential for business rules that

* require uniqueness like email addresses, codes, or composite keys.

*/

export interface IUniqueIndex {

/**

* Array of field names that together form the unique constraint.

*

* Can be single field (e.g., ["email"]) or composite (e.g., ["channel_id",

* "code"]). All field names must exist in the model. Order matters for

* composite indexes. Examples: ["code"], ["shopping_channel_id",

* "nickname"], ["email"]

*/

fieldNames: string[] & tags.MinItems<1> & tags.UniqueItems;

/**

* Explicit marker indicating this is a unique index.

*

* Always true to distinguish from regular indexes. Used by code generator

* to emit "@@unique" directive in Prisma schema instead of "@@index".

*/

unique: true;

}

/**

* Interface representing a regular (non-unique) index for query performance.

*

* Regular indexes speed up database queries by creating optimized data

* structures for common search patterns. Essential for foreign keys, date

* ranges, and frequently filtered fields.

*/

export interface IPlainIndex {

/**

* Array of field names to include in the performance index.

*

* Can be single field (e.g., ["created_at"]) or composite (e.g.,

* ["customer_id", "created_at"]). All field names must exist in the model.

* Order matters for composite indexes and should match common query

* patterns. Examples: ["created_at"], ["shopping_customer_id",

* "created_at"], ["ip"]

*/

fieldNames: string[] & tags.MinItems<1> & tags.UniqueItems;

}

/**

* Interface representing a GIN (Generalized Inverted Index) for full-text

* search.

*

* GIN indexes enable advanced PostgreSQL text search capabilities including

* fuzzy matching and partial text search using trigram operations. Essential

* for user-facing search features on text content.

*/

export interface IGinIndex {

/**

* Name of the text field to index for full-text search capabilities.

*

* Must be a string field in the model that contains searchable text.

* Examples from uploaded schemas: "nickname", "title", "body", "name". Used

* with PostgreSQL gin_trgm_ops for trigram-based fuzzy text search.

*/

fieldName: string;

}

}
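To make the last step of the pipeline concrete, the sketch below renders a small value shaped like the AST types above into Prisma schema text. It is a simplified illustration, not @autobe's actual generator: the `Sketch*` types are a hand-copied subset of `AutoBeDatabase.IModel`, and `PRISMA_TYPES`, `renderField`, and `renderModel` are hypothetical names with an assumed mapping from AST field types to Prisma scalar types.

```typescript
// Reduced, hand-copied subset of the AutoBeDatabase AST types.
type SketchPlainType =
  | "boolean" | "int" | "double" | "string" | "uri" | "uuid" | "datetime";

interface SketchPlainField {
  name: string;
  type: SketchPlainType;
  nullable: boolean;
}

interface SketchModel {
  name: string;
  primaryField: { name: string; type: "uuid" };
  plainFields: SketchPlainField[];
  uniqueIndexes: { fieldNames: string[]; unique: true }[];
}

// Assumed mapping from AST field types to Prisma scalar types.
const PRISMA_TYPES: Record<SketchPlainType, string> = {
  boolean: "Boolean",
  int: "Int",
  double: "Float",
  string: "String",
  uri: "String",
  uuid: "String",
  datetime: "DateTime",
};

function renderField(field: SketchPlainField): string {
  const optional = field.nullable ? "?" : "";
  const attribute = field.type === "uuid" ? " @db.Uuid" : "";
  return `  ${field.name} ${PRISMA_TYPES[field.type]}${optional}${attribute}`;
}

function renderModel(model: SketchModel): string {
  return [
    `model ${model.name} {`,
    `  ${model.primaryField.name} String @id @db.Uuid`,
    ...model.plainFields.map(renderField),
    ...model.uniqueIndexes.map(
      (index) => `  @@unique([${index.fieldNames.join(", ")}])`,
    ),
    "}",
  ].join("\n");
}

const articleModel: SketchModel = {
  name: "bbs_articles",
  primaryField: { name: "id", type: "uuid" },
  plainFields: [
    { name: "title", type: "string", nullable: false },
    { name: "created_at", type: "datetime", nullable: false },
    { name: "deleted_at", type: "datetime", nullable: true },
  ],
  uniqueIndexes: [{ fieldNames: ["title"], unique: true }],
};

console.log(renderModel(articleModel));
```

Since the renderer only ever sees values that already satisfy the AST types, it can stay a plain, deterministic mapping with no error handling for malformed input.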

import { tags } from "typia";

import { CamelCasePattern } from "../typings/CamelCasePattern";

/**

* AST type system for programmatic OpenAPI specification generation through AI

* function calling.

*

* This namespace defines a comprehensive Abstract Syntax Tree structure that

* enables AI agents to construct complete OpenAPI v3.1 specification documents

* at the AST level. Each type corresponds to specific OpenAPI specification

* constructs, allowing precise control over generated API documentation while

* maintaining type safety and business logic accuracy.

*

* LANGUAGE REQUIREMENT: All description fields throughout this type system

* (including operation descriptions, summaries, parameter descriptions, schema

* descriptions, etc.) MUST be written exclusively in English. This is a strict

* requirement for API documentation consistency and international

* compatibility.

*

* ## Core Purpose

*

* The system is designed for systematic generation where AI function calls

* build API specifications step-by-step, mapping business requirements to

* executable OpenAPI documents. Instead of generating raw OpenAPI JSON/YAML

* strings, AI agents construct structured AST objects that represent:

*

* - Complete REST API specifications with proper operation definitions

* - Comprehensive type schemas aligned with database structures

* - Detailed parameter and response body specifications

* - Consistent naming conventions and documentation standards

*

* ## Architecture Overview

*

* - **IDocument**: Root container representing the entire OpenAPI specification

* - **IOperation**: Individual API endpoints with HTTP methods, paths, and data

* structures

* - **IComponents**: Reusable schema definitions and security configurations

* - **IJsonSchema**: Type system following OpenAPI v3.1 JSON Schema specification

* - **Parameters & Bodies**: Request/response structures with validation rules

*

* ## OpenAPI v3.1 Compliance

*

* This implementation follows the OpenAPI v3.1 specification but is streamlined

* to:

*

* - Remove ambiguous and duplicated expressions for improved clarity

* - Enhance AI generation capabilities through simplified type structures

* - Maintain developer understanding with consistent patterns

* - Support comprehensive API documentation with detailed descriptions

*

* ## Design Principles

*

* The generated specifications follow enterprise-grade patterns:

*

* - Consistent RESTful API design with standard HTTP methods

* - Comprehensive CRUD operation coverage for all business entities

* - Type-safe request/response schemas with validation constraints

* - Detailed documentation with multi-paragraph descriptions

* - Reusable component schemas for maintainability and consistency

* - Security-aware design with authorization considerations

*

* Each generated OpenAPI document reflects real business workflows where API

* operations provide complete access to underlying data models, maintain proper

* REST conventions, and include comprehensive documentation suitable for

* developer consumption and automated tooling integration.

*

* @author Samchon

*/

export namespace AutoBeOpenApi {

/* -----------------------------------------------------------

DOCUMENT

----------------------------------------------------------- */

/**

* Document of the RESTful API operations.

*

* This interface serves as the root document for defining RESTful API

* {@link operations} and {@link components}. It corresponds to the top-level

* structure of an OpenAPI specification document, containing all API

* operations and reusable components.

*

* This simplified version focuses only on the operations and components,

* omitting other OpenAPI elements like info, servers, security schemes, etc.

* to keep the structure concise for AI-based code generation.

*

* IMPORTANT: When creating this document, you MUST ensure that:

*

* 1. The API operations and component schemas directly correspond to the

* database schema

* 2. All entity types and their properties reference and incorporate the

* description comments from the related database schema tables and

* columns

* 3. Descriptions are detailed and organized into multiple paragraphs

* 4. The API fully represents all entities and relationships defined in the

* database schema

* 5. EVERY SINGLE TABLE in the database schema MUST have corresponding API

* operations for CRUD actions (Create, Read, Update, Delete) as

* applicable

* 6. NO TABLE should be omitted from the API design - all tables require API

* coverage

*

* ## Type Naming Conventions

*

* When defining component schemas, follow these naming conventions for

* consistency:

*

* - **Main Entity Types**: Use `IEntityName` format for main entity with

* detailed information (e.g., `IShoppingSale`)

*

* - These MUST directly correspond to entity tables in the database schema

* - Their descriptions MUST incorporate the table description comments from the

* database schema

* - Each property MUST reference the corresponding column description from the

* database schema

* - Entity types should represent the full, detailed version of domain entities

* - **Related Operation Types**: Use `IEntityName.IOperation` format with these

* common suffixes:

*

* - `IEntityName.ICreate`: Request body for creation operations (POST)

*

* - Should include all required fields from the database schema entity

* - `IEntityName.IUpdate`: Request body for update operations (PUT)

*

* - Should include updatable fields as defined in the database schema

* - `IEntityName.ISummary`: Simplified response version with essential

* properties

* - `IEntityName.IRequest`: Request parameters for list operations (often with

* search/pagination)

* - `IEntityName.IInvert`: Alternative view of an entity from a different

* perspective

* - `IPageIEntityName`: Paginated results container with `pagination` and

* `data` properties

*

* These consistent naming patterns create a predictable and self-documenting

* API that accurately reflects the underlying database schema, making it

* easier for developers to understand the purpose of each schema and its

* relationship to the database model.

*/

export interface IDocument {

/**

* List of API operations.

*

* This array contains all the API endpoints with their HTTP methods,

* descriptions, parameters, request/response structures, etc. Each

* operation corresponds to an entry in the paths section of an OpenAPI

* document.

*

* CRITICAL: This array MUST include operations for EVERY TABLE defined in

* the database schema. The AI generation MUST NOT skip or omit any tables

* when creating operations. The operations array MUST be complete and

* exhaustive, covering all database entities without exception.

*

* IMPORTANT: For each API operation, ensure that:

*

* 1. EVERY independent entity table in the database schema has corresponding

* API operations for basic CRUD functions (at minimum)

* 2. ALL TABLES from the database schema MUST have at least one API operation,

* no matter how many tables are in the schema

* 3. DO NOT STOP generating API operations until ALL tables have been

* addressed

* 4. The description field refers to and incorporates the description comments

* from the related DB schema tables and columns

* 5. The description must be VERY detailed and organized into MULTIPLE

* PARAGRAPHS separated by line breaks, not just a single paragraph

* 6. The description should explain the purpose, functionality, and any

* relationships to other entities in the database schema

*

* Note that the combination of {@link AutoBeOpenApi.IOperation.path} and

* {@link AutoBeOpenApi.IOperation.method} must be unique.

*

* Also, never forget any specification that is listed in the requirement

* analysis report and DB design documents. Every feature must be

* implemented in the API operations.

*

* @minItems 1

*/

operations: AutoBeOpenApi.IOperation[];

/**

* Reusable components of the API operations.

*

* This contains schemas, parameters, responses, and other reusable elements

* referenced throughout the API operations. It corresponds to the

* components section in an OpenAPI document.

*

* CRITICAL: Components MUST include type definitions for EVERY TABLE in the

* database schema. The AI generation process MUST create schema components

* for ALL database entities without exception, regardless of how many

* tables are in the database.

*

* IMPORTANT: For all component types and their properties:

*

* 1. EVERY component MUST have a detailed description that references the

* corresponding database schema table's description comments

* 2. EACH property within component types MUST have detailed descriptions that

* reference the corresponding column description comments in the

* database schema

* 3. All descriptions MUST be organized into MULTIPLE PARAGRAPHS (separated by

* line breaks) based on different aspects of the entity

* 4. Descriptions should be comprehensive enough that anyone who reads them

* can understand the purpose, functionality, and relationships of the

* type

* 5. ALL TABLES from the database schema MUST have corresponding schema

* components, no matter how many tables are in the schema

*

* All request and response bodies must reference named types defined in

* this components section. This ensures consistency and reusability across

* the API.

*

* ## Type Naming Conventions in Components

*

* When defining schema components, follow these standardized naming

* patterns for consistency and clarity:

*

* ### Main Entity Types

*

* - `IEntityName`

*

* - Primary entity objects (e.g., `IShoppingSale`, `IShoppingOrder`)

* - These represent the full, detailed version of domain entities

*

* ### Operation-Specific Types

*

* - `IEntityName.ICreate`

*

* - Request body schemas for creation operations (POST)

* - Contains all fields needed to create a new entity

* - `IEntityName.IUpdate`

*

* - Request body schemas for update operations (PUT)

* - Contains fields that can be modified on an existing entity

* - `IEntityName.IRequest`

*

* - Parameters for search/filter/pagination in list operations

* - Often contains `search`, `sort`, `page`, and `limit` properties

*

* ### View Types

*

* - `IEntityName.ISummary`

*

* - Simplified view of entities for list operations

* - Contains essential properties only, omitting detailed nested objects

* - `IEntityName.IAbridge`: Intermediate view with more details than Summary

* but less than full entity

* - `IEntityName.IInvert`: Alternative representation of an entity from a

* different perspective

*

* ### Container Types

*

* - `IPageIEntityName`

*

* - Paginated results container

* - Usually contains `pagination` and `data` properties

*

* These naming conventions create a self-documenting API where the purpose

* of each schema is immediately clear from its name. This helps both

* developers and AI tools understand and maintain the API structure.

*/

components: AutoBeOpenApi.IComponents;

}
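// Illustrative sketch, not part of the AutoBE source: one possible way to
// check the rule stated on `IDocument.operations` that the combination of
// path and method must be unique. `EndpointLike` and `findDuplicateEndpoints`
// are hypothetical names introduced only for this example.
interface EndpointLike {
  path: string;
  method: string;
}

function findDuplicateEndpoints(operations: EndpointLike[]): string[] {
  const seen = new Set<string>();
  const duplicates = new Set<string>();
  for (const op of operations) {
    // Key each operation as "METHOD path", e.g. "GET /users/{userId}".
    const key = `${op.method.toUpperCase()} ${op.path}`;
    if (seen.has(key)) duplicates.add(key);
    else seen.add(key);
  }
  // An empty result means every path + method pair is unique.
  return Array.from(duplicates);
}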

/**

* Operation of the RESTful API.

*

* This interface defines a single API endpoint with its HTTP {@link method},

* {@link path}, {@link parameters path parameters},

* {@link requestBody request body}, and {@link responseBody} structure. It

* corresponds to an individual operation in the paths section of an OpenAPI

* document.

*

* Each operation requires a detailed explanation of its purpose through the

* reason and description fields, making it clear why the API was designed and

* how it should be used.

*

* All request bodies and responses for this operation must be object types

* and must reference named types defined in the components section. The

* content-type is always `application/json`. For file upload/download

* operations, use `string & tags.Format<"uri">` in the appropriate schema

* instead of binary data formats.

*

* In OpenAPI, this might represent:

*

* ```json

* {

* "/shoppings/customers/orders": {

* "post": {

* "description": "Create a new order application from shopping cart...",

* "parameters": [...],

* "requestBody": {...},

* "responses": {...},

* ...

* }

* }

* }

* ```

*/

export interface IOperation extends IEndpoint {

/**

* Implementation specification for the API operation.

*

* This is an AutoBE-internal field (not exposed in standard OpenAPI output)

* that provides detailed implementation guidance for downstream agents

* (Realize Agent, Test Agent, etc.).

*

* Include **HOW** this operation should be implemented:

*

* - Service layer logic and algorithm

* - Database queries and transactions involved

* - Business rules and validation logic

* - Edge cases and error handling

* - Integration with other services

*

* This field complements the `description` field: while `description` is

* for API consumers (Swagger UI, SDK docs), `specification` is for agents

* that implement the operation.

*

* > MUST be written in English. Never use other languages.

*/

specification: string;

/**

* Detailed description about the API operation.

*

* IMPORTANT: This field MUST be extensively detailed and MUST reference the

* description comments from the related database schema tables and columns.

* The description should be organized into MULTIPLE PARAGRAPHS separated by

* line breaks to improve readability and comprehension.

*

* For example, include separate paragraphs for:

*

* - The purpose and overview of the API operation

* - Security considerations and user permissions

* - Relationship to underlying database entities

* - Validation rules and business logic

* - Related API operations that might be used together with this one

* - Expected behavior and error handling

*

* When writing the description, be sure to incorporate the corresponding DB

* schema's description comments, matching the level of detail and style of

* those comments. This ensures consistency between the API documentation

* and database structure.

*

* If this operation depends on other APIs, describe each dependency in this

* field together with the reason for it. For example, if another API

* operation must be executed before this one, that requirement must be

* explicitly described.

*

* - `GET /shoppings/customers/sales` must be pre-executed to get the entire list

* of summarized sales. Detailed sale information would be obtained by

* specifying the sale ID in the path parameter.

*

* **CRITICAL WARNING about soft delete keywords**: DO NOT use terms like

* "soft delete", "soft-delete", or similar variations in this description

* UNLESS the operation actually implements soft deletion. These keywords

* trigger validation logic that expects a corresponding soft_delete_column

* to be specified. Only use these terms when you intend to implement soft

* deletion (marking records as deleted without removing them from the

* database).

*

* Example of problematic description: ❌ "This would normally be a

* soft-delete, but we intentionally perform permanent deletion here" - This

* triggers soft delete validation despite being a hard delete operation.

*

* > MUST be written in English. Never use other languages.

*/

description: string;

/**

* Authorization type of the API operation.

*

* - `"login"`: User login operations that validate credentials

* - `"join"`: User registration operations that create accounts

* - `"refresh"`: Token refresh operations that renew access tokens

* - `null`: All other operations (CRUD, business logic, etc.)

*

* Use authentication values only for credential validation, user

* registration, or token refresh operations. Use `null` for all other

* business operations.

*

* Examples:

*

* - `/auth/login` → `"login"`

* - `/auth/register` → `"join"`

* - `/auth/refresh` → `"refresh"`

* - `/auth/validate` → `null`

* - `/users/{id}`, `/shoppings/customers/sales/cancel` → `null`

*/

authorizationType: "login" | "join" | "refresh" | null;

/**

* List of path parameters.

*

* Note that the {@link AutoBeOpenApi.IParameter.name identifier name} of each

* path parameter must correspond to the

* {@link path API operation path}.

*

* For example, if there's an API operation which has {@link path} of

* `/shoppings/customers/sales/{saleId}/questions/{questionId}/comments/{commentId}`,

* its list of {@link AutoBeOpenApi.IParameter.name path parameters} must be

* like:

*

* - `saleId`

* - `questionId`

* - `commentId`

*/

parameters: AutoBeOpenApi.IParameter[];

/**

* Request body of the API operation.

*

* Defines the payload structure for the request. Contains a description and

* schema reference to define the expected input data.

*

* Should be `null` for operations that don't require a request body, such

* as most "get" operations.

*/

requestBody: AutoBeOpenApi.IRequestBody | null;

/**

* Response body of the API operation.

*

* Defines the structure of the successful response data. Contains a

* description and schema reference for the returned data.

*

* Should be null for operations that don't return any data.

*/

responseBody: AutoBeOpenApi.IResponseBody | null;

/**

* Authorization actor required to access this API operation.

*

* This field specifies which user actor is allowed to access this endpoint.

* The actor name must correspond exactly to the actual actors defined in

* your system's database schema.

*

* ## Naming Convention

*

* Actor names MUST use camelCase.

*

* ## Actor-Based Path Convention

*

* When authorizationActor is specified, it should align with the path

* structure:

*

* - If authorizationActor is "admin" → path might be "/admin/resources/{id}"

* - If authorizationActor is "seller" → path might be "/seller/products"

* - Special case: For user's own resources, use path prefix "/my/" regardless

* of actor

*

* ## Important Guidelines

*

* - Set to `null` for public endpoints that require no authentication

* - Set to specific actor string for actor-restricted endpoints

* - The actor name MUST match exactly with the user type/actor defined in the

* database

* - This actor will be used by the Realize Agent to generate appropriate

* decorator and authorization logic in the provider functions

* - The controller will apply the corresponding authentication decorator

* based on this actor

*

* ## Examples

*

* - `null` - Public endpoint, no authentication required

* - `"user"` - Any authenticated user can access

* - `"admin"` - Only admin users can access

* - `"seller"` - Only seller users can access

* - `"moderator"` - Only moderator users can access

*

* Note: The actual authentication/authorization implementation will be

* handled by decorators at the controller level, and the provider function

* will receive the authenticated user object with the appropriate type.

*/

authorizationActor: (string & CamelCasePattern & tags.MinLength<1>) | null;

/**

* Functional name of the API endpoint.

*

* This is a semantic identifier that represents the primary function or

* purpose of the API endpoint. It serves as a canonical name that can be

* used for code generation, SDK method names, and internal references.

*

* ## Reserved Word Restrictions

*

* CRITICAL: The name MUST NOT be a TypeScript/JavaScript reserved word, as

* it will be used as a class method name in generated code. Avoid names

* like:

*

* - `delete`, `for`, `if`, `else`, `while`, `do`, `switch`, `case`, `break`

* - `continue`, `function`, `return`, `with`, `in`, `of`, `instanceof`

* - `typeof`, `void`, `var`, `let`, `const`, `class`, `extends`, `import`

* - `export`, `default`, `try`, `catch`, `finally`, `throw`, `new`

* - `super`, `this`, `null`, `true`, `false`, `async`, `await`

* - `yield`, `static`, `private`, `protected`, `public`, `implements`

* - `interface`, `package`, `enum`, `debugger`

*

* Instead, use alternative names for these operations:

*

* - Use `erase` instead of `delete`

* - Use `iterate` instead of `for`

* - Use `when` instead of `if`

* - Use `cls` instead of `class`

*

* ## Standard Endpoint Names

*

* Use these conventional names based on the endpoint's primary function:

*

* - **`index`**: List/search operations that return multiple entities

*

* - Typically used with PATCH method for complex queries

* - Example: `PATCH /users` → `name: "index"`

* - **`at`**: Retrieve a specific entity by identifier

*

* - Typically used with GET method on single resource

* - Example: `GET /users/{userId}` → `name: "at"`

* - **`create`**: Create a new entity

*

* - Typically used with POST method

* - Example: `POST /users` → `name: "create"`

* - **`update`**: Update an existing entity

*

* - Typically used with PUT method

* - Example: `PUT /users/{userId}` → `name: "update"`

* - **`erase`**: Delete/remove an entity (NOT `delete` - reserved word!)

*

* - Typically used with DELETE method

* - Example: `DELETE /users/{userId}` → `name: "erase"`

*

* ## Custom Endpoint Names

*

* For specialized operations beyond basic CRUD, use descriptive verbs:

*

* - **`activate`**: Enable or turn on a feature/entity

* - **`deactivate`**: Disable or turn off a feature/entity

* - **`approve`**: Approve a request or entity

* - **`reject`**: Reject a request or entity

* - **`publish`**: Make content publicly available

* - **`archive`**: Move to archived state

* - **`restore`**: Restore from archived/deleted state

* - **`duplicate`**: Create a copy of an entity

* - **`transfer`**: Move ownership or change assignment

* - **`validate`**: Validate data or state

* - **`process`**: Execute a business process or workflow

* - **`export`**: Generate downloadable data

* - **`import`**: Process uploaded data

*

* ## Naming Guidelines

*

* - MUST use camelCase naming convention

* - Use singular verb forms

* - Be concise but descriptive

* - Avoid abbreviations unless widely understood

* - Ensure the name clearly represents the endpoint's primary action

* - For nested resources, focus on the action rather than hierarchy

* - NEVER use JavaScript/TypeScript reserved words

*

* Valid Examples:

*

* - `index`, `create`, `update`, `erase` (single word)

* - `updatePassword`, `cancelOrder`, `publishArticle` (camelCase)

* - `validateEmail`, `generateReport`, `exportData` (camelCase)

*

* Invalid Examples:

*

* - `update_password` (snake_case not allowed)

* - `UpdatePassword` (PascalCase not allowed)

* - `update-password` (kebab-case not allowed)

*

* Path to Name Examples:

*

* - `GET /shopping/orders/{orderId}/items` → `name: "index"` (lists items)

* - `POST /shopping/orders/{orderId}/cancel` → `name: "cancel"`

* - `PUT /users/{userId}/password` → `name: "updatePassword"`

*

* ## Uniqueness Rule

*

* The `name` must be unique within the API's accessor namespace. The

* accessor is formed by combining the path segments (excluding parameters)

* with the operation name.

*

* Accessor formation:

*

* 1. Extract non-parameter segments from the path (remove `{...}` parts)

* 2. Join segments with dots

* 3. Append the operation name

*

* Examples:

*

* - Path: `/shopping/sale/{saleId}/review/{reviewId}`, Name: `at` → Accessor:

* `shopping.sale.review.at`

* - Path: `/users/{userId}/posts`, Name: `index` → Accessor:

* `users.posts.index`

* - Path: `/auth/login`, Name: `signIn` → Accessor: `auth.login.signIn`

*

* Each accessor must be globally unique across the entire API. This ensures

* operations can be uniquely identified in generated SDKs and prevents

* naming conflicts.

*/

name: string & CamelCasePattern;

/**

* Prerequisites for this API operation.

*

* The `prerequisites` field defines API operations that must be

* successfully executed before this operation can be performed. This

* creates an explicit dependency chain between API endpoints, ensuring

* proper execution order and data availability.

*

* ## CRITICAL WARNING: Authentication Prerequisites

*

* **NEVER include authentication-related operations as prerequisites!**

* Authentication is handled separately through the `authorizationActor`

* field and should NOT be part of the prerequisite chain. Do NOT add

* prerequisites for:

*

* - Login endpoints

* - Token validation endpoints

* - User authentication checks

* - Permission verification endpoints

*

* Prerequisites are ONLY for business logic dependencies, NOT for

* authentication/authorization.

*

* ## Purpose and Use Cases

*

* Prerequisites are essential for operations that depend on:

*

* 1. **Existence Validation**: Ensuring resources exist before manipulation

* 2. **State Requirements**: Verifying resources are in the correct state

* 3. **Data Dependencies**: Loading necessary data for the current operation

* 4. **Business Logic Constraints**: Enforcing domain-specific rules

*

* ## Execution Flow

*

* When an operation has prerequisites:

*

* 1. Each prerequisite API must be called first in the specified order

* 2. Prerequisites must return successful responses (2xx status codes)

* 3. Only after all prerequisites succeed can the main operation proceed

* 4. If any prerequisite fails, the operation should not be attempted

*

* ## Common Patterns

*

* ### Resource Existence Check

*

* ```typescript

* // Before updating an order item, ensure the order exists

* prerequisites: [

* {

* endpoint: { path: "/orders/{orderId}", method: "get" },

* description: "Order must exist in the system",

* },

* ];

* ```

*

* ### State Validation

*

* ```typescript

* // Before processing payment, ensure order is in correct state

* prerequisites: [

* {

* endpoint: { path: "/orders/{orderId}/status", method: "get" },

* description: "Order must be in 'pending_payment' status",

* },

* ];

* ```

*

* ### Hierarchical Dependencies

*

* ```typescript

* // Before accessing a deeply nested resource

* prerequisites: [

* {

* endpoint: { path: "/projects/{projectId}", method: "get" },

* description: "Project must exist",

* },

* {

* endpoint: {

* path: "/projects/{projectId}/tasks/{taskId}",

* method: "get",

* },

* description: "Task must exist within the project",

* },

* ];

* ```

*

* ## Important Guidelines

*

* 1. **Order Matters**: Prerequisites are executed in array order

* 2. **Parameter Inheritance**: Path parameters from prerequisites can be used

* in the main operation

* 3. **Error Handling**: Failed prerequisites should prevent main operation

* 4. **Performance**: Consider caching prerequisite results when appropriate

* 5. **Documentation**: Each prerequisite must have a clear description

* explaining why it's required

* 6. **No Authentication**: NEVER use prerequisites for authentication checks

*

* ## Test Generation Impact

*

* The Test Agent uses prerequisites to:

*

* - Generate proper test setup sequences

* - Create valid test data in the correct order

* - Ensure test scenarios follow realistic workflows

* - Validate error handling when prerequisites fail

*

* @see {@link IPrerequisite} for the structure of each prerequisite

*/

prerequisites: IPrerequisite[];

/**

* Accessor of the operation.

*

* If you configure this property, the assigned value will be used as

* {@link IHttpMigrateRoute.accessor}. Also, it can be used as the

* {@link IHttpLlmFunction.name} by joining with `.` character in the LLM

* function calling application.

*

* Note that the `x-samchon-accessor` value must be unique in the entire

* OpenAPI document operations. If there are duplicated `x-samchon-accessor`

* values, {@link IHttpMigrateRoute.accessor} will ignore all duplicated

* `x-samchon-accessor` values and generate the

* {@link IHttpMigrateRoute.accessor} by itself.

*

* @internal

*/

accessor?: string[] | undefined;

}
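// Illustrative sketch, not part of the AutoBE source: the accessor formation
// described under "Uniqueness Rule" for `IOperation.name` (drop `{...}`
// segments, join the rest with dots, append the operation name).
// `formAccessor` is a hypothetical helper name.
function formAccessor(path: string, name: string): string {
  const segments = path
    .split("/")
    // Skip empty segments and path parameters such as "{saleId}".
    .filter((segment) => segment.length > 0 && !/^\{.*\}$/.test(segment));
  return [...segments, name].join(".");
}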

/**

* Authorization - Authentication and user type information

*

* This field defines how the API authenticates the request and restricts

* access to specific user types.

*

* ✅ Only the `Authorization` HTTP header is used for authentication. The

* expected format is:

*

* Authorization: Bearer <access_token>

*

* The token must be a bearer token (e.g., JWT or similar), and when parsed,

* it is guaranteed to include at least the authenticated actor's `id` field.

* No other headers or cookie-based authentication methods are supported.

*/

export interface IAuthorization {

/**

* Allowed user types for this API

*

* Specifies which user types are permitted to access this API.

*

* This is not a permission level or access control actor. Instead, it

* describes **who** the user is — their type within the service's domain

* model. It must correspond 1:1 with how the user is represented in the

* database.

*

* MUST use camelCase naming convention.

*

* ⚠️ Important: Each `actor` must **exactly match a table name defined in

* the database schema**. This is not merely a convention or example — it is

* a strict requirement.

*

* A valid actor must meet the following criteria:

*

* - It must uniquely map to a user group at the database level, represented

* by a dedicated table.

* - It must not overlap semantically with other actors — for instance, both

* `admin` and `administrator` must not exist to describe the same type.

*

* Therefore, if a user type cannot be clearly and uniquely distinguished in

* the database, it **cannot** be used as a valid `actor` here.

*/

name: string & CamelCasePattern;

/**

* Detailed description of the authorization actor

*

* Provides a comprehensive explanation of:

*

* - The purpose and scope of this authorization actor

* - Which types of users are granted this actor

* - What capabilities and permissions this actor enables

* - Any constraints or limitations associated with the actor

* - How this actor relates to the underlying database schema

* - Examples of typical use cases for this actor

*

* This description should be detailed enough for both API consumers to

* understand the actor's purpose and for the system to properly enforce

* access controls.

*

* > MUST be written in English. Never use other languages.

*/

description: string;

}
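// Illustrative sketch, not part of the AutoBE source: reading the token from
// the `Authorization: Bearer <access_token>` header format described on
// `IAuthorization`. `parseBearerToken` is a hypothetical helper, and treating
// the "Bearer" scheme as case-sensitive is an assumption of this example.
function parseBearerToken(header: string | undefined): string | null {
  if (header === undefined) return null;
  // Accept exactly "Bearer", whitespace, then a single token without spaces.
  const match = /^Bearer\s+(\S+)$/.exec(header.trim());
  return match?.[1] ?? null;
}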

/**

* Path parameter information for API routes.

*

* This interface defines a path parameter that appears in the URL of an API

* endpoint. Path parameters are enclosed in curly braces in the

* {@link AutoBeOpenApi.IOperation.path operation path} and must be defined

* with their types and descriptions.

*

* For example, if the API operation path is

* `/shoppings/customers/sales/{saleId}/questions/{questionId}/comments/{commentId}`,

* the path parameters should look like this:

*

* ```json

* {

* "path": "/shoppings/customers/sales/{saleId}/questions/{questionId}/comments/{commentId}",

* "method": "get",

* "parameters": [

* {

* "name": "saleId",

* "in": "path",

* "schema": { "type": "string", "format": "uuid" },

* "description": "Target sale's ID"

* },

* {

* "name": "questionId",

* "in": "path",

* "schema": { "type": "string", "format": "uuid" },

* "description": "Target question's ID"

* },

* {

* "name": "commentId",

* "in": "path",

* "schema": { "type": "string", "format": "uuid" },

* "description": "Target comment's ID"

* }

* ]

* }

* ```

*/

export interface IParameter {

/**

* Description about the path parameter.

*

* This is the standard OpenAPI description field that will be displayed in

* Swagger UI, SDK documentation, and other API documentation tools. Write a

* short, concise, and clear description that helps API consumers understand

* what this parameter represents.

*

* Implementation details for parameter handling are covered in the parent

* {@link IOperation.specification} field.

*

* > MUST be written in English. Never use other languages.

*/

description: string;

/**

* Identifier name of the path parameter.

*

* This name must match exactly with the parameter name in the route path.

* It must correspond to the

* {@link AutoBeOpenApi.IOperation.path API operation path}.

*

* MUST use camelCase naming convention.

*/

name: string & CamelCasePattern;

/**

* Type schema of the path parameter.

*

* Path parameters are typically primitive types like

* {@link AutoBeOpenApi.IJsonSchema.IString strings},

* {@link AutoBeOpenApi.IJsonSchema.IInteger integers},

* {@link AutoBeOpenApi.IJsonSchema.INumber numbers}.

*

* If you need other types, please use request body instead with object type

* encapsulation.

*/

schema:

| AutoBeOpenApi.IJsonSchema.IInteger

| AutoBeOpenApi.IJsonSchema.INumber

| AutoBeOpenApi.IJsonSchema.IString;

}
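// Illustrative sketch, not part of the AutoBE source: listing the `{name}`
// placeholders of an operation path so they can be compared against the
// `IParameter.name` values. `extractPathParameterNames` is a hypothetical
// helper name.
function extractPathParameterNames(path: string): string[] {
  // Find every "{...}" placeholder, then strip the surrounding braces.
  const placeholders = path.match(/\{[^{}]+\}/g) ?? [];
  return placeholders.map((placeholder) => placeholder.slice(1, -1));
}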

/**

* Request body information of OpenAPI operation.

*

* This interface defines the structure for request bodies in API routes. It

* corresponds to the requestBody section in OpenAPI specifications, providing

* both a description and schema reference for the request payload.

*

* The content-type for all request bodies is always `application/json`. Even

* when file uploading is required, don't use `multipart/form-data` or

* `application/x-www-form-urlencoded` content types. Instead, just define a

* URI string property in the request body schema.

*

* Note that all body schemas must be transformable to a

* {@link AutoBeOpenApi.IJsonSchema.IReference reference} type defined in the

* {@link AutoBeOpenApi.IComponents.schemas components section} as an

* {@link AutoBeOpenApi.IJsonSchema.IObject object} type.

*

* In OpenAPI, this might represent:

*

* ```json

* {

* "requestBody": {

* "description": "Creation info of the order",

* "content": {

* "application/json": {

* "schema": {

* "$ref": "#/components/schemas/IShoppingOrder.ICreate"

* }

* }

* }

* }

* }

* ```

*/

export interface IRequestBody {

/**

* Description about the request body.

*

* Write a short, concise, and clear description of the request body.

*

* > MUST be written in English. Never use other languages.

*/

description: string;

/**

* Request body type name.

*

* This specifies the data structure expected in the request body, that will

* be transformed to {@link AutoBeOpenApi.IJsonSchema.IReference reference}

* type in the {@link AutoBeOpenApi.IComponents.schemas components section}

* as an {@link AutoBeOpenApi.IJsonSchema.IObject object} type.

*

* Here is the naming convention for the request body type:

*

* - `IEntityName.ICreate`: Request body for creation operations (POST)

* - `IEntityName.IUpdate`: Request body for update operations (PUT)

* - `IEntityName.IRequest`: Request parameters for list operations (often

* with search/pagination)

*

* What you write:

*

* ```json

* {

* "typeName": "IShoppingOrder.ICreate"

* }

* ```

*

* Transformed to:

*

* ```json

* {

* "schema": {

* "$ref": "#/components/schemas/IShoppingOrder.ICreate"

* }

* }

* ```

*/

typeName: string;

}
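
// Illustrative sketch only, not part of @autobe: one way the `typeName`
// shorthand documented above could be expanded into an OpenAPI `requestBody`
// entry. `SketchRequestBody` and `sketchToOpenApiRequestBody` are
// hypothetical names introduced for this example.
interface SketchRequestBody {
  description: string;
  typeName: string;
}

function sketchToOpenApiRequestBody(body: SketchRequestBody) {
  return {
    description: body.description,
    content: {
      // The content type is always `application/json`, as stated above.
      "application/json": {
        // The body is referenced by name from the components section.
        schema: { $ref: `#/components/schemas/${body.typeName}` },
      },
    },
  };
}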

/**

* Response body information for OpenAPI operation.

*

* This interface defines the structure of a successful response from an API

* operation. It provides a description of the response and a schema reference

* to define the returned data structure.

*

* The content-type for all responses is always `application/json`. Even when

* file downloading is required, don't use `application/octet-stream` or

* `multipart/form-data` content types. Instead, just define a URI string

* property in the response body schema.

*

* In OpenAPI, this might represent:

*

* ```json

* {

* "responses": {

* "200": {

* "description": "Order information",

* "content": {

* "application/json": {

* "schema": { "$ref": "#/components/schemas/IShoppingOrder" }

* }

* }

* }

* }

* }

* ```

*/

export interface IResponseBody {

/**

* Description of the response body.

*

* Write a short, concise, and clear description of the response body.

*

* > MUST be written in English. Never use other languages.

*/

description: string;

/**

* Response body's data type.

*

* Specifies the structure of the returned data (response body), that will

* be transformed to {@link AutoBeOpenApi.IJsonSchema.IReference reference} type in the

* {@link AutoBeOpenApi.IComponents.schemas components section} as an

* {@link AutoBeOpenApi.IJsonSchema.IObject object} type.

*

* Here is the naming convention for the response body type:

*

* - `IEntityName`: Main entity with detailed information (e.g.,

* `IShoppingSale`)

* - `IEntityName.ISummary`: Simplified response version with essential

* properties

* - `IEntityName.IInvert`: Alternative view of an entity from a different

* perspective

* - `IPageIEntityName`: Paginated results container with `pagination` and

* `data` properties

*

* What you write:

*

* ```json

* {

* "typeName": "IShoppingOrder"

* }

* ```

*

* Transformed to:

*

* ```json

* {

* "schema": {

* "$ref": "#/components/schemas/IShoppingOrder"

* }

* }

* ```

*/

typeName: string;

}

/* -----------------------------------------------------------

JSON SCHEMA

----------------------------------------------------------- */

/**

* Reusable components in OpenAPI.

*

* Storage for the reusable components of an OpenAPI document.

*

* In other words, it stores named DTO schemas and security schemes.

*/

export interface IComponents {

/**

* An object to hold reusable DTO schemas.

*

* In other words, a collection of named JSON schemas.

*

* IMPORTANT: For each schema in this collection:

*

* 1. EVERY schema MUST have a detailed description that references and aligns

* with the description comments from the corresponding database schema

* tables

* 2. EACH property within the schema MUST have detailed descriptions that

* reference and align with the description comments from the

* corresponding DB schema columns

* 3. All descriptions MUST be organized into MULTIPLE PARAGRAPHS (separated by

* line breaks) when appropriate

* 4. Descriptions should be comprehensive enough that anyone reading them can

* understand the purpose, functionality, and constraints of each type

* and property without needing to reference other documentation

*/

schemas: Record<string, IJsonSchemaDescriptive>;

/** Whether the `Authorization` header is included or not. */

authorizations: IAuthorization[];

}

/**

* Type schema info.

*

* `AutoBeOpenApi.IJsonSchema` is the type schema information used when

* generating OpenAPI documents.

*

* `AutoBeOpenApi.IJsonSchema` basically follows the JSON schema specification

* of OpenAPI v3.1, but is slightly reduced to remove the ambiguous and

* duplicated expressions of OpenAPI v3.1, for convenience, clarity, and

* easier AI generation.

*

* ## CRITICAL: Union Type Expression

*

* In this type system, union types (including nullable types) MUST be

* expressed using the `IOneOf` structure. NEVER use array notation in the

* `type` field.

*

* ❌ **FORBIDDEN** - Array notation in type field:

*

* ```typescript

* {

* "type": ["string", "null"] // NEVER DO THIS!

* }

* ```

*

* ✅ **CORRECT** - Using IOneOf for unions:

*

* ```typescript

* // For nullable string:

* {

* "oneOf": [{ "type": "string" }, { "type": "null" }]

* }

*

* // For string | number union:

* {

* "oneOf": [{ "type": "string" }, { "type": "number" }]

* }

* ```

*

* The `type` field in any schema object is a discriminator that identifies

* the schema type and MUST contain exactly one string value.

*/

export type IJsonSchema =

| IJsonSchema.IConstant

| IJsonSchema.IBoolean

| IJsonSchema.IInteger

| IJsonSchema.INumber

| IJsonSchema.IString

| IJsonSchema.IArray

| IJsonSchema.IObject

| IJsonSchema.IReference

| IJsonSchema.IOneOf

| IJsonSchema.INull;
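
// Illustrative sketch only, not part of @autobe: a small helper that builds a
// nullable schema using the `oneOf` form required above, instead of array
// notation in `type`. `SketchSchema` and `sketchNullable` are hypothetical
// names, and `SketchSchema` is a deliberately reduced stand-in for the real
// `IJsonSchema` union.
type SketchSchema =
  | { type: "string" | "number" | "integer" | "boolean" | "null" }
  | { oneOf: SketchSchema[] };

function sketchNullable(schema: SketchSchema): SketchSchema {
  // Flatten an existing union so `oneOf` is never nested inside `oneOf`.
  const members: SketchSchema[] = "oneOf" in schema ? schema.oneOf : [schema];
  // Do not add a second `null` member when one is already present.
  const hasNull = members.some((m) => "type" in m && m.type === "null");
  return { oneOf: hasNull ? [...members] : [...members, { type: "null" }] };
}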

export namespace IJsonSchema {

/** Constant value type. */

export interface IConstant {

/** The constant value. */

const: boolean | number | string;

}

/** Boolean type info. */

export interface IBoolean extends ISignificant<"boolean"> {}

/** Integer type info. */

export interface IInteger extends ISignificant<"integer"> {

/**

* Minimum value restriction.

*

* @type int64

*/

minimum?: number;

/**

* Maximum value restriction.

*

* @type int64

*/

maximum?: number;

/**

* Exclusive minimum value restriction.

*

* @type int64

*/

exclusiveMinimum?: number;

/**

* Exclusive maximum value restriction.

*

* @type int64

*/

exclusiveMaximum?: number;

/**